Who should own mocking in a microservices environment?

This article is an expanded version of Tom's reply to a similar discussion on the /r/microservices subreddit, which you can find here.

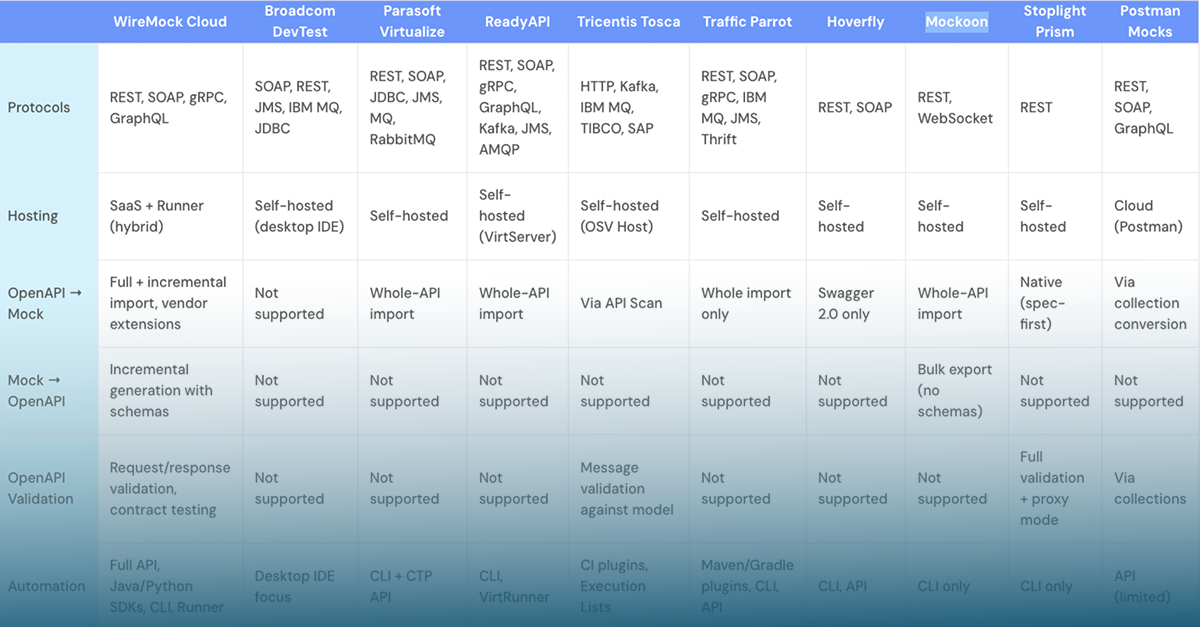

Who should be in charge of API simulation? Should it be the API producers—the teams that build and maintain the APIs—or API consumers, who integrate with the mocked services and depend on them? In environments where dozens or hundreds of microservices interact, the approach you choose significantly impacts developer productivity, software quality, and your overall efficiency.

Currently, most mocks are consumer-owned

The most common scenario today places the onus on API consumers: if you call an API, you build and maintain your own mock for it.

There are good reasons for this approach, chiefly that it keeps mock ownership with the people who actually use the mock, which lets them design more practical mock endpoints and responses.

When building an application that consumes an API, you rarely need every endpoint. Your application might only use three endpoints out of twenty, with specific request patterns that matter to your use cases. Creating a mock that mirrors just these interactions is both efficient and pragmatic.

Let’s take a frontend team building a user dashboard. They don't need to mock the entire banking API - just the account and transaction endpoints with specific test data that exercises their UI states. They need account balances to sometimes be negative to test overdraft UI elements, and transactions with specific dates to test filtering logic. They can design a mock that does exactly that and it will probably suffice.
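To make the dashboard example concrete, here is a minimal sketch of such a consumer-owned mock using only Python's standard library. The endpoint paths, field names, and test data are assumptions made up for illustration; they do not come from any real banking API.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical test data: only what the dashboard's UI states need.
ACCOUNTS = [
    {"id": "acc-1", "balance": 1250.00},
    {"id": "acc-2", "balance": -42.50},  # negative balance exercises the overdraft UI
]
TRANSACTIONS = [
    {"id": "tx-1", "accountId": "acc-1", "date": "2024-01-15", "amount": -20.00},
    {"id": "tx-2", "accountId": "acc-1", "date": "2024-02-01", "amount": 500.00},
    {"id": "tx-3", "accountId": "acc-2", "date": "2024-02-03", "amount": -42.50},
]


def route(path):
    """Return (status, payload) for the two endpoints the dashboard calls."""
    if path == "/accounts":
        return 200, ACCOUNTS
    if path == "/transactions":
        return 200, TRANSACTIONS
    # Everything else in the (hypothetical) banking API is deliberately not mocked.
    return 404, {"error": "not mocked"}


class MockBankHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        status, payload = route(self.path)
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=8080):
    """Start the mock on localhost; call this from a test fixture or a script."""
    HTTPServer(("127.0.0.1", port), MockBankHandler).serve_forever()
```

The mock covers two endpoints and a handful of records, which is the appeal of the consumer-owned approach: it is small because it only has to satisfy one team's tests.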

It’s a similar story when it comes to data formats, response variations, etc. — as a consumer, you know exactly what you need for your tests, and can create exactly that. This helps keep your mock-reliant tests focused and meaningful.

But while this makes sense at the team level, it can get problematic at the organizational level.

The hidden costs of consumer-owned mocks

The first major issue appears when APIs change frequently. When producers update an API, every consumer mock of the changed behavior is out of date until someone updates it. For example, a payment system might pass all of its tests but break in production because the API provider added a required authentication header that the mocks never accounted for.
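The failure mode is easy to reproduce in miniature. The sketch below uses plain functions as stand-ins for the real API and the consumer's mock; all names, status codes, and the choice of an `Authorization` header are assumptions for illustration.

```python
def real_payment_api(headers, body):
    """Stand-in for the producer's current behaviour: the header is now required."""
    if "Authorization" not in headers:
        return 401, {"error": "missing Authorization header"}
    return 201, {"status": "accepted"}


def stale_consumer_mock(headers, body):
    """Consumer mock written before the change: it never inspects headers."""
    return 201, {"status": "accepted"}


def submit_payment(api, amount):
    """Client code under test; it was never updated to send the new header."""
    return api({"Content-Type": "application/json"}, {"amount": amount})


mock_status, _ = submit_payment(stale_consumer_mock, 10)
real_status, _ = submit_payment(real_payment_api, 10)
print(mock_status, real_status)  # prints "201 401"
```

The test suite only ever sees the first result, so it stays green while the real integration is already broken.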

This maintenance burden gets heavier over time. Each API change forces every consuming team to update their mocks. At a Fortune 100 company we are working with, teams were spending up to 20% of their development time just keeping mocks current instead of building features (before they switched to WireMock).

The second problem is wasted effort. It's not unusual to see 5-10 different teams maintaining separate mocks of the same API, each with slight variations. This not only wastes engineering hours but also creates inconsistency when different teams' mocks behave differently.

And the more teams and services you have, the messier it gets…

Some solutions we've seen in the wild:

Automated API recording

API recording can capture real API interactions and generate mock definitions automatically. This works well for stable external APIs. For example, consider a logistics company where a weekly Jenkins job updates mock stubs with fresh payment processor interactions. When the gateway adds a new required header, their tests catch it as soon as the stubs are refreshed. The downside is that this method struggles with time-dependent APIs, as recorded data quickly becomes obsolete.
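The record-and-replay idea can be sketched in a few lines. This is a simplified illustration, not how WireMock or any particular recorder is implemented; the class names, the stub format, and the fake upstream are all hypothetical.

```python
import json


class RecordingProxy:
    """Forwards calls to the real API and remembers each response."""

    def __init__(self, upstream):
        self.upstream = upstream  # callable: (method, path) -> (status, body)
        self.stubs = {}

    def call(self, method, path):
        status, body = self.upstream(method, path)
        self.stubs[f"{method} {path}"] = {"status": status, "body": body}
        return status, body

    def export(self):
        """Serialise the recordings so a scheduled job could commit them."""
        return json.dumps(self.stubs, indent=2, sort_keys=True)


class ReplayMock:
    """Serves previously recorded responses without touching the real API."""

    def __init__(self, exported):
        self.stubs = json.loads(exported)

    def call(self, method, path):
        stub = self.stubs.get(f"{method} {path}")
        if stub is None:
            return 404, {"error": "no recording for this request"}
        return stub["status"], stub["body"]


def fake_payment_processor(method, path):
    """Hypothetical stand-in for the external API being recorded."""
    if (method, path) == ("GET", "/payments/p1"):
        return 200, {"id": "p1", "status": "settled", "settledAt": "2024-03-01T09:00:00Z"}
    return 404, {"error": "not found"}


recorder = RecordingProxy(fake_payment_processor)
recorder.call("GET", "/payments/p1")
replay = ReplayMock(recorder.export())
```

Note that `settledAt` is frozen at recording time. Any test that compares it with the current date will start failing as the recording ages, which is the time-dependence problem described above.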

Mock validation against OpenAPI

In this model, API producers maintain specifications, while consumers build mocks validated against these specs. Teams can implement this by publishing OpenAPI specs to central repositories and configuring validation extensions during test runs. This catches structure mismatches while letting teams craft specific test data. The limitation here is that validation confirms structure but not business logic. Your API call might have the right fields but represent impossible scenarios.
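As a rough illustration of what structural validation does and does not catch, the sketch below checks a mock response against a hand-written schema fragment. The fragment is shaped like an OpenAPI schema object, but the checker is a deliberately tiny stand-in for a real validator, and the schema and payloads are assumptions invented for this example.

```python
# Hypothetical schema fragment, as a producer might publish it.
PAYMENT_SCHEMA = {
    "type": "object",
    "required": ["id", "amount", "refunded"],
    "properties": {
        "id": {"type": "string"},
        "amount": {"type": "number"},
        "refunded": {"type": "number"},
    },
}


def matches_type(value, type_name):
    """Check a value against a small subset of JSON Schema type names."""
    if type_name == "number":
        # bool is a subclass of int in Python, so exclude it explicitly.
        return isinstance(value, (int, float)) and not isinstance(value, bool)
    if type_name == "string":
        return isinstance(value, str)
    if type_name == "boolean":
        return isinstance(value, bool)
    raise ValueError(f"unsupported type in this sketch: {type_name}")


def structural_errors(payload, schema):
    """Return a list of structure problems; an empty list means 'valid'."""
    errors = []
    for field in schema["required"]:
        if field not in payload:
            errors.append(f"missing required field: {field}")
    for field, rule in schema["properties"].items():
        if field in payload and not matches_type(payload[field], rule["type"]):
            errors.append(f"wrong type for {field}: expected {rule['type']}")
    return errors


# A mock written before 'refunded' became required is flagged...
stale_mock = {"id": "p1", "amount": 100}
# ...but a refund larger than the payment passes, because it is structurally fine.
impossible_mock = {"id": "p2", "amount": 100, "refunded": 250}

print(structural_errors(stale_mock, PAYMENT_SCHEMA))
print(structural_errors(impossible_mock, PAYMENT_SCHEMA))
```

The second payload is the kind of case the limitation above refers to: every field is present and correctly typed, yet the scenario could never come back from the real service.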

Producer-built mocks

Some organizations have API producers create and distribute mock packages alongside client libraries. This works best for stable internal APIs with clear ownership. However, producer teams rarely understand all consumer testing needs, often resulting in minimal implementations that don't support edge case testing.

Of these options, OpenAPI validation typically offers the best balance between consistency and flexibility, though recording works well for stable external APIs.

WireMock’s approach to mock ownership

One of the main motivations behind WireMock Cloud is solving these types of conundrums, where unclear ownership leads to mock drift. Our view is that effective API mocking isn't an either/or proposition. It requires collaboration between producers and consumers, and this is reflected in how WireMock Cloud guides teams to work:

- Collaborative mock definitions: In WireMock Cloud, API producers and consumers work together around centralized mock definitions. Producers can publish their OpenAPI specifications to WireMock Cloud, establishing a single source of truth for API structure. Consumers can then create specific test scenarios without duplicating the foundational work.

- Intelligent recording: We’re continuously improving recording to expand the range of cases where recordings can be made and stay useful over time. This includes the introduction of local-first recording with the WireMock CLI, allowing developers to record actual interactions from their local environment, even when those APIs require complex authentication.

- Validation against reality: To tackle the drift problem, we've built validation directly into the platform. As tests run, WireMock Cloud can compare mock traffic against the latest OpenAPI specifications and flag inconsistencies. This catches structural incompatibilities early, before they lead to false test confidence. WireMock also provides differential testing with proxying, which allows you to route a percentage of test traffic to both the mock and the real API, then compare the responses to identify semantic differences.

- AI-powered mock maintenance: Using WireMock MCP, you can use coding assistants like GitHub Copilot to create mocks based on your codebase and requirements, and to update them when those requirements change. This is a new capability, but it offers a potential way out of the dilemma altogether.
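The differential testing idea mentioned in the list above comes down to sending the same request to the mock and the real API and comparing what comes back. The function below is a generic sketch of that comparison step only. It is not WireMock's implementation, and the example payloads are hypothetical.

```python
def diff_responses(mock, real, path=""):
    """Return human-readable differences between two JSON-like values."""
    if type(mock) is not type(real):
        return [f"{path or '/'}: type {type(mock).__name__} != {type(real).__name__}"]
    if isinstance(mock, dict):
        diffs = []
        for key in sorted(set(mock) | set(real)):
            child = f"{path}/{key}"
            if key not in real:
                diffs.append(f"{child}: only in mock")
            elif key not in mock:
                diffs.append(f"{child}: only in real API")
            else:
                diffs.extend(diff_responses(mock[key], real[key], child))
        return diffs
    if isinstance(mock, list):
        diffs = []
        if len(mock) != len(real):
            diffs.append(f"{path or '/'}: length {len(mock)} != {len(real)}")
        for i, (m, r) in enumerate(zip(mock, real)):
            diffs.extend(diff_responses(m, r, f"{path}/{i}"))
        return diffs
    return [] if mock == real else [f"{path or '/'}: {mock!r} != {real!r}"]


# Hypothetical responses to the same request.
mock_body = {"id": "p1", "status": "accepted", "fee": 0}
real_body = {"id": "p1", "status": "ACCEPTED", "fee": 0, "currency": "USD"}

for line in diff_responses(mock_body, real_body):
    print(line)
```

This sketch treats `10` and `10.0` as a type difference; a production comparison would need rules for numeric equivalence and for fields such as timestamps and generated IDs, which are expected to differ between runs.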

Our approach doesn't force all mocking into either a producer-owned or consumer-owned model. Instead, we're creating tools that let teams share responsibility in ways that make sense for their specific contexts. The producer provides structure, the consumer adds the specific test cases - and both spend less time duplicating each other's work.
